Hey everyone,

I am trying to do depth to color image registration on some recorded video (recorded with the Android API). My troubles and complaints are as follows:

- The use of

this.mDevice.setImageRegistrationMode(ImageRegistrationMode.DEPTH_TO_COLOR); crashes the application, although this.mDevice.isImageRegistrationModeSupported(ImageRegistrationMode.DEPTH_TO_COLOR) returns true. (It complains that setting property 5 failed.) I inspected the C++ API and found that depth registration sets property 101 on the depth stream instead of on the device. So I tried to implement this in Android, but it does not seem to change anything.

...

public void setDepthRegistration() {NativeMethods.oniStreamSetProperty(getHandle(),101,true);}

...

this.depthStream.setDepthRegistration();

- Since the built-in depth registration failed, I tried to read the camera parameters and use OpenCV's stereoRectify instead. Camera parameters from the Orbbec API for my Astra Mini S:

vid 2bc5, pid 0407

Mirrored : no

[IR Camera Intrinsic]

578.938 578.938 318.496 251.533

[RGB Camera Intrinsic]

517.138 517.138 319.184 229.077

[Rotate Matrix]

1.000 -0.003 0.002

0.003 1.000 0.005

-0.002 -0.005 1.000

[Translate Matrix]

-25.097 0.288 -1.118

[IR Camera Distorted Params ]

-0.094342 0.290512 -0.299526 -0.000318 -0.000279

[RGB Camera Distorted Params]

0.044356 -0.174023 0.077324 0.001794 -0.003853

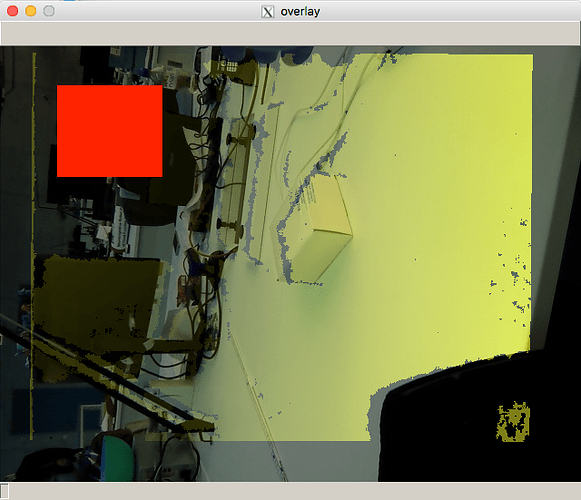

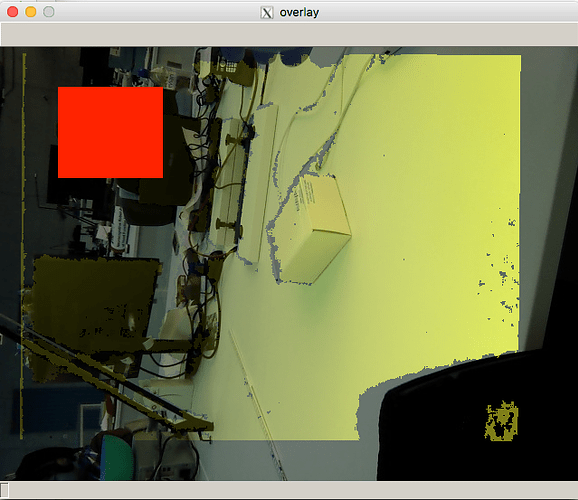

A minimal script to transform and display the attached example images, which produces the attached example output:

import cv2

import numpy as np

w=640

h=480

# Camera Params

fx_d = 578.938

fy_d = 578.938

cx_d = 318.496

cy_d = 251.533

k1_d = -0.094342

k2_d = 0.290512

p1_d = -0.299526

p2_d = -0.000318

k3_d = -0.000279

cam_matrix_d = np.array([

[fx_d,0,cx_d],

[0,fy_d,cy_d],

[0,0,1]

])

dist_d = np.array([ k1_d, k2_d, p1_d, p2_d, k3_d])

fx_rgb = 517.138

fy_rgb = 517.138

cx_rgb = 319.184

cy_rgb = 229.077

k1_rgb = 0.044356

k2_rgb = -0.174023

p1_rgb = 0.077324

p2_rgb = 0.001794

k3_rgb = -0.003853

cam_matrix_rgb = np.array([

[fx_rgb,0,cx_rgb],

[0,fy_rgb,cy_rgb],

[0,0,1]

])

dist_rgb = np.array([k1_rgb, k2_rgb, p1_rgb, p2_rgb,k3_rgb ])

RR = np.array([

[1, -0.003 , 0.002],

[0.003,1,0.005],

[-0.002,-0.005,1]

])

RR2d = np.array([

[1, -0.003 ],

[0.003,1]

])

TT = np.array([-25.097, 0.288, -1.118])

R_d,R_rgb,P_d,P_rgb,_,_,_ = cv2.stereoRectify(cam_matrix_d,dist_d,cam_matrix_rgb,dist_rgb,(w,h),RR,TT,None,None,None,None,None,cv2.CALIB_ZERO_DISPARITY)

map_d1,map_d2=cv2.initUndistortRectifyMap(cam_matrix_d,dist_d,R_d,P_d,(w,h),cv2.CV_32FC1)

map_rgb1,map_rgb2=cv2.initUndistortRectifyMap(cam_matrix_rgb,dist_rgb,R_rgb,P_rgb,(w,h),cv2.CV_32FC1)

# Read images

depth_img = cv2.imread('depth1.png')

rgb_img = cv2.imread('rgb1.png')

# Remap to RGB

depth_img_corr = cv2.remap(depth_img,map_d1,map_d2,cv2.INTER_LINEAR)

rgb_img_corr = cv2.remap(rgb_img, map_rgb1, map_rgb2, cv2.INTER_LINEAR)

# Create some kind of overlay for visualization

overlay_img = rgb_img_corr.copy()

alpha = 0.8

cv2.addWeighted(depth_img_corr, alpha, overlay_img, 1 - alpha, 0, overlay_img)

cv2.imshow("rgb_orig", rgb_img)

cv2.imshow("depth_orig", depth_img)

cv2.imshow("rgb_corr", rgb_img_corr)

cv2.imshow("depth_corr", depth_img_corr)

cv2.imshow("overlay", overlay_img)

cv2.waitKey(34)

while True:

    pass

Something is clearly wrong here. The original images aren’t that distorted, and also aren’t that far away from each other (the rotation matrix is almost the identity). What am I doing wrong?
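To make clear what I am ultimately after, here is a minimal sketch of the per-pixel registration I expected the SDK to do: back-project each depth pixel to 3D, move it into the RGB camera frame, and project it with the RGB intrinsics. The function name `register_depth_to_color` is my own. The sketch rests on assumptions I have not been able to confirm: that the rotation and translation above map IR-frame points into the RGB frame (if it is the other way around, they need to be inverted), that the translation and the depth values are both in millimetres, and that lens distortion can be ignored for a first check.

```python
import numpy as np

# Intrinsics and extrinsics as printed by the Orbbec tool above.
K_D = np.array([[578.938, 0, 318.496], [0, 578.938, 251.533], [0, 0, 1]])
K_RGB = np.array([[517.138, 0, 319.184], [0, 517.138, 229.077], [0, 0, 1]])
R = np.array([[1, -0.003, 0.002], [0.003, 1, 0.005], [-0.002, -0.005, 1]])
T = np.array([-25.097, 0.288, -1.118])  # assumed to be millimetres


def register_depth_to_color(depth_mm, k_d=K_D, k_rgb=K_RGB, r=R, t=T):
    """Reproject a depth map (H x W, millimetres, 0 = invalid) into the RGB view.

    Assumes (r, t) map IR-frame points into the RGB frame and ignores
    lens distortion. Both are assumptions, not confirmed facts.
    """
    h, w = depth_mm.shape
    v, u = np.mgrid[0:h, 0:w]
    z = depth_mm.astype(np.float64)
    valid = z > 0

    # Back-project valid depth pixels to 3D points in the IR camera frame.
    x = (u[valid] - k_d[0, 2]) * z[valid] / k_d[0, 0]
    y = (v[valid] - k_d[1, 2]) * z[valid] / k_d[1, 1]
    pts = np.stack([x, y, z[valid]], axis=1)

    # Transform into the RGB camera frame and keep points in front of it.
    pts_rgb = pts @ r.T + t
    pts_rgb = pts_rgb[pts_rgb[:, 2] > 0]

    # Project with the RGB intrinsics and round to the nearest pixel.
    u_rgb = np.round(k_rgb[0, 0] * pts_rgb[:, 0] / pts_rgb[:, 2] + k_rgb[0, 2]).astype(int)
    v_rgb = np.round(k_rgb[1, 1] * pts_rgb[:, 1] / pts_rgb[:, 2] + k_rgb[1, 2]).astype(int)
    inside = (u_rgb >= 0) & (u_rgb < w) & (v_rgb >= 0) & (v_rgb < h)

    # Where several points land on one pixel, keep the nearest one.
    registered = np.full((h, w), np.inf)
    np.minimum.at(registered, (v_rgb[inside], u_rgb[inside]), pts_rgb[inside, 2])
    registered[np.isinf(registered)] = 0  # pixels that received no depth
    return registered
```

For real data I would load the depth image with `cv2.imread('depth1.png', cv2.IMREAD_UNCHANGED)` so that the raw depth values are preserved, then overlay the result on the unmodified RGB image. The output has holes where no depth pixel lands; I have made no attempt to fill them.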

- There is an option for depth/color synchronization in OpenNI,

this.mDevice.setDepthColorSyncEnabled(true); which doesn’t crash my device, but also doesn’t seem to do anything. Is there a way to enable this for Android? Or are both cameras just free running, and I should just hope the corresponding depth and RGB frames are temporally close enough?
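If the cameras really are free running, the fallback I have in mind is pairing frames by timestamp after recording. Below is a minimal sketch of that idea. It assumes I have a sorted per-frame timestamp (in microseconds) for both streams in my recording; the function name `pair_by_timestamp` and the 16 ms tolerance (roughly half a frame period at 30 fps) are my own choices, not anything from the SDK.

```python
import bisect


def pair_by_timestamp(depth_ts, color_ts, max_gap_us=16_000):
    """Pair each depth frame with the color frame closest in time.

    depth_ts, color_ts: sorted lists of timestamps in microseconds.
    Returns (depth_index, color_index) pairs whose time gap is at most
    max_gap_us; depth frames without a close enough color frame are dropped.
    """
    pairs = []
    if not color_ts:
        return pairs
    for i, t in enumerate(depth_ts):
        # The nearest color frame is either just before or just after t.
        j = bisect.bisect_left(color_ts, t)
        candidates = [k for k in (j - 1, j) if 0 <= k < len(color_ts)]
        best = min(candidates, key=lambda k: abs(color_ts[k] - t))
        if abs(color_ts[best] - t) <= max_gap_us:
            pairs.append((i, best))
    return pairs


if __name__ == "__main__":
    # Made-up timestamps, only to show the call.
    print(pair_by_timestamp([0, 33_000, 66_000], [5_000, 40_000, 120_000]))
```

This only tells me which frames are close in time; it cannot make up for motion between two exposures, so hardware sync would still be preferable if it can be enabled.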

I’m hoping someone (maybe @Jackson, since you’ve been very helpful with this before) can help with this. I don’t think I can switch to the new Astra SDK beta, because there doesn’t seem to be a convenient way to record data with it (and I also don’t want to open another can of worms when depth registration is the last piece I need). Example images are attached.

Thanks for reading/helping!