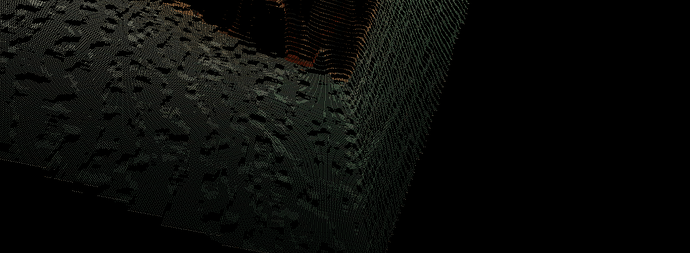

After looking at the point clouds generated with the Astra Pro, I noticed that my depth measurements look somewhat quantized. In the two attached pcl_viewer screenshots of my bedroom walls, you can see a grid-like pattern: the points are quantized along the depth axis. I made sure that I’m using the 16-bit depth values from astra::DepthFrame, and I’m converting those 16-bit values directly to float for my point cloud.
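For reference, turning the 16-bit millimeter values into a point cloud usually amounts to a pinhole back-projection like the minimal sketch below. The `Intrinsics` struct, its `fx`, `fy`, `cx`, `cy` fields and the `depth_to_points` function are hypothetical names; the real intrinsics have to come from the depth camera's calibration, which this post doesn't give.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical pinhole intrinsics (in pixels) for the depth image.
struct Intrinsics {
    float fx, fy, cx, cy;
};

struct Point3f {
    float x, y, z;
};

// Back-projects a row-major depth image (millimeters) into 3D points,
// also in millimeters. Pixels with depth <= 0 (no measurement) are skipped.
std::vector<Point3f> depth_to_points(const std::int16_t* depth_mm,
                                     int width, int height,
                                     const Intrinsics& k) {
    assert(k.fx > 0.0f && k.fy > 0.0f);
    std::vector<Point3f> points;
    points.reserve(static_cast<std::size_t>(width) * height);
    for (int v = 0; v < height; ++v) {
        for (int u = 0; u < width; ++u) {
            const std::int16_t d = depth_mm[v * width + u];
            if (d <= 0) continue;  // treat 0 as "no depth"
            const float z = static_cast<float>(d);
            points.push_back({(u - k.cx) * z / k.fx,
                              (v - k.cy) * z / k.fy,
                              z});
        }
    }
    return points;
}
```

The cast to `float` reproduces exactly the steps already present in the 16-bit input, so it neither causes nor removes the depth quantization.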

While inspecting the Orbbec.ini config file in the OpenNI orbbec-dev branch, I found the “InputFormat” setting, which offers Uncompressed and Packed 11-bit options. The Packed 11-bit option is the default, and when I changed it to Uncompressed, my Astra Pro stopped streaming.

First, is the Orbbec.ini setting I found relevant to the quantization I’m observing? Is the Astra Pro firmware quantizing its depth measurements?

Second, any ideas for what I can do to reduce or remove this quantization artifact?

Thanks!

Since the quantization isn’t entirely clear from the screenshots, I made some histograms of the actual values returned by the data() function of the astra::DepthFrame object.

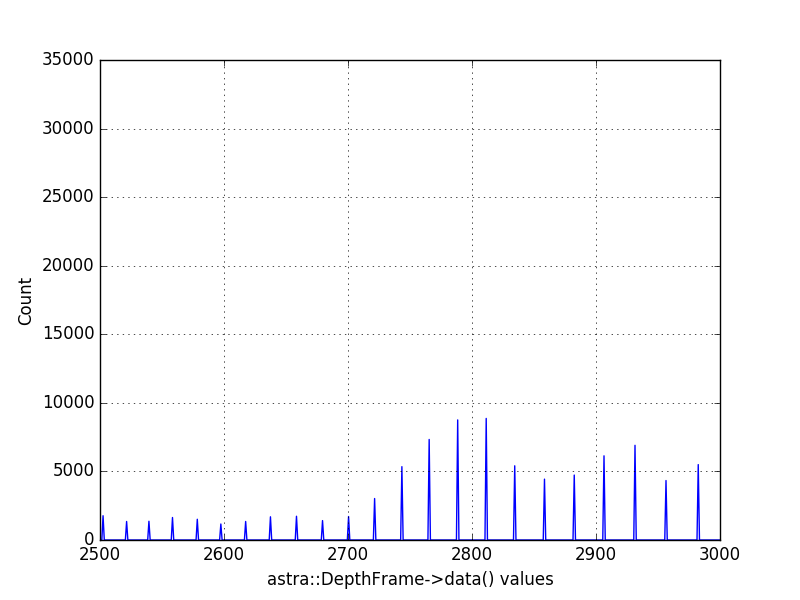

Here is a histogram of the full range of 16-bit depth values I observe when capturing my bedroom walls.

Here is a close-up of the 2500-3000 millimeter range:

As you can see, the values from the DepthFrame itself are clearly quantized in steps of roughly 25 millimeters at only 3 meters away. I’m initializing my depth stream with the following code:

```cpp
#include <astra/astra.hpp>

astra::initialize();

astra::StreamSet* mStreamSet = new astra::StreamSet();
astra::StreamReader reader = mStreamSet->create_reader();

// Configure the depth stream: 640x480 at 30 fps, values in millimeters
astra::DepthStream depth = reader.stream<astra::DepthStream>();
astra::ImageStreamMode depthMode(640, 480, 30, astra_pixel_formats::ASTRA_PIXEL_FORMAT_DEPTH_MM);
depth.set_mode(depthMode);
depth.enable_mirroring(false);
depth.enable_registration(true);
depth.start();  // frames are only delivered once the stream is started

// Grab the frame (get<T>() returns the DepthFrame by value)
astra::Frame frame = reader.get_latest_frame();
const auto depthFrame = frame.get<astra::DepthFrame>();

// Get the data: DepthFrame::data() returns a pointer to the int16_t depth values
const int16_t* depth_values = depthFrame.data();
```

Note that the above code is copy/pasted from a larger project, so I’ve extracted only the Astra-interfacing code.
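To put a number on the step size rather than reading it off a histogram, one option is to list the distinct depth codes that occur in a range and the gaps between consecutive codes. This is a minimal sketch; `observed_depth_steps` is a hypothetical helper, not part of the Astra SDK.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <set>
#include <vector>

// Collects the distinct depth codes (mm) that occur in [lo_mm, hi_mm] and
// returns the gaps between consecutive codes. On a smooth wall, gaps that
// are consistently much larger than 1 mm mean the values were already
// quantized before reaching the application.
std::vector<int> observed_depth_steps(const std::int16_t* depth_mm,
                                      std::size_t count,
                                      int lo_mm, int hi_mm) {
    assert(lo_mm <= hi_mm);
    std::set<int> levels;  // keeps the codes sorted and unique
    for (std::size_t i = 0; i < count; ++i) {
        const int d = depth_mm[i];
        if (d >= lo_mm && d <= hi_mm) levels.insert(d);
    }
    std::vector<int> gaps;
    int prev = 0;
    bool have_prev = false;
    for (int level : levels) {
        if (have_prev) gaps.push_back(level - prev);
        prev = level;
        have_prev = true;
    }
    return gaps;
}
```

Assuming the frame's `width()` and `height()` accessors, a call such as `observed_depth_steps(depthFrame.data(), depthFrame.width() * depthFrame.height(), 2500, 3000)` lists the gaps that the close-up histogram shows visually.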

I know that with the PrimeSense/Kinect cameras the depth represented by each step of the raw value is non-linear and gets larger with distance, so this quantization is not unexpected in principle; whether 25 mm at 3 m is reasonable for this camera is another question. Either way, resolution will get worse with distance.
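The growth with distance follows from triangulation: depth is Z = f·b/d for focal length f (pixels), baseline b and disparity d, so dZ/dd = -Z²/(f·b) and one disparity step Δd corresponds to a depth step of roughly ΔZ ≈ Z²·Δd/(f·b). That is quadratic in Z. The sketch below just evaluates this formula; the focal length, baseline and disparity step are parameters, and the Astra Pro's actual values are not known here.

```cpp
#include <cassert>

// Approximate depth step (mm) between adjacent disparity codes for a
// triangulation sensor with Z = f * b / d:
//   dZ/dd = -Z^2 / (f * b)  =>  delta_Z ~= Z^2 * delta_d / (f * b)
double depth_step_mm(double z_mm, double focal_px, double baseline_mm,
                     double disparity_step_px) {
    assert(focal_px > 0.0 && baseline_mm > 0.0);
    return z_mm * z_mm * disparity_step_px / (focal_px * baseline_mm);
}
```

With placeholder values of 580 px, 75 mm and a 1/8-pixel disparity step (chosen only for illustration), this gives roughly 26 mm at 3 m and roughly 2.9 mm at 1 m. The point is the ninefold growth from 1 m to 3 m, not the specific numbers.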

Another factor that may come into play: if the wall is at a slant relative to the camera, the depth points are spaced further apart at greater distances simply because of angular spread.
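The slant effect can be estimated with a pinhole model: one pixel covers about Z/f millimeters laterally at depth Z, so on a flat wall tilted by an angle θ, neighboring pixels near the image center differ in depth by roughly (Z/f)·tan θ. Both quantities grow linearly with distance. This minimal sketch assumes an ideal pinhole camera with focal length `focal_px` in pixels; the function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>

// Lateral distance (mm) covered by one pixel at depth z_mm for a pinhole
// camera with focal length focal_px (pixels).
double pixel_footprint_mm(double z_mm, double focal_px) {
    assert(focal_px > 0.0);
    return z_mm / focal_px;
}

// Approximate depth difference (mm) between neighboring pixels near the
// image center on a flat wall tilted by tilt_rad relative to the image plane.
double neighbor_depth_delta_mm(double z_mm, double focal_px, double tilt_rad) {
    return pixel_footprint_mm(z_mm, focal_px) * std::tan(tilt_rad);
}
```

Unlike the disparity step, this spacing is a property of the geometry, so it would appear even with a perfectly continuous depth measurement.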