Hello!

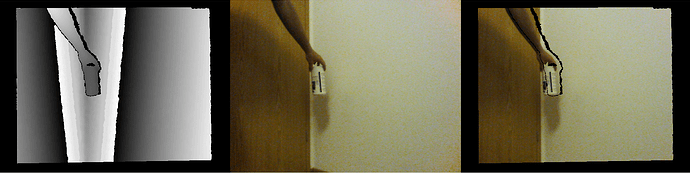

I am trying to collect a small dataset for a depth estimation problem using an Orbbec Astra. I’ve put together some code to capture both RGB and depth images, but when comparing them I noticed that they are not aligned along the horizontal axis, as seen in the attached image. I also noticed that the farther the object is from the camera, the greater the misalignment.

I don’t know how to proceed to solve this. I would like the two images to align pixel by pixel. Is that possible to achieve?

Any help is much appreciated. Thank you in advance!