I’ve been working on software that performs blob detection on point clouds from the Kinect, and I’m now updating it to work with the latest Orbbec cameras (Femto Bolt and Femto Mega).

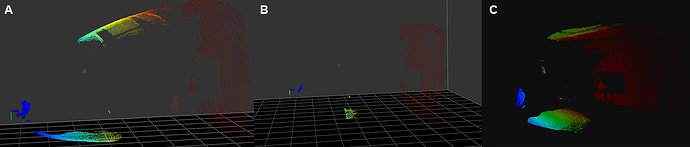

I started capturing the point cloud in the wide field-of-view depth mode (512 x 512), and when I do, the point cloud is distorted and not properly aligned with real-world coordinates (A in the image).

To remedy that, Orbbec provides a mode that aligns the depth points with the color camera view (D2C). However, points that fall outside the color camera's view are discarded, which defeats the purpose of the wide FoV mode because a large part of the data is lost (B in the image).
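For context on why those points disappear: depth-to-color alignment in general transforms each depth point into the color camera's frame and projects it onto the color image, so anything that lands outside the color sensor's bounds has no pixel to go to. The sketch below illustrates that generic step, assuming a pinhole color model with no lens distortion and a known depth-to-color extrinsic (rotation `R`, translation `t` in mm). `Intrinsics` and `depth_point_to_color_pixel` are hypothetical names, not part of the Orbbec SDK, and this is not Orbbec's actual D2C implementation.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple


@dataclass
class Intrinsics:
    """Hypothetical pinhole intrinsics for the color camera (no distortion)."""
    fx: float
    fy: float
    cx: float
    cy: float
    width: int
    height: int


def depth_point_to_color_pixel(
    point_depth: Sequence[float],
    rotation: List[List[float]],
    translation: Sequence[float],
    color: Intrinsics,
) -> Optional[Tuple[float, float]]:
    """Move a 3D point (mm) from the depth frame to the color frame and project it.

    Returns (u, v) in color pixels, or None when the point has no valid
    color pixel (behind the camera or outside the color image).
    """
    x, y, z = point_depth
    # Rigid transform: p_color = R * p_depth + t (R is 3x3 row-major).
    xc = rotation[0][0] * x + rotation[0][1] * y + rotation[0][2] * z + translation[0]
    yc = rotation[1][0] * x + rotation[1][1] * y + rotation[1][2] * z + translation[1]
    zc = rotation[2][0] * x + rotation[2][1] * y + rotation[2][2] * z + translation[2]
    if zc <= 0:
        return None
    # Pinhole projection onto the color image plane.
    u = color.fx * xc / zc + color.cx
    v = color.fy * yc / zc + color.cy
    if 0 <= u < color.width and 0 <= v < color.height:
        return (u, v)
    # Outside the color FoV: an aligned (D2C) output has nowhere to put this point.
    return None
```

With identity rotation and zero translation, a point straight ahead maps to the principal point, while a point far to the side falls off the color image and is dropped; that second case is what removes the wide-FoV periphery in B.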

Have you seen a way to undistort the point cloud in world coordinates, as the Azure Kinect SDK did (C in the image), rather than relative to the RGB camera view? Or should I just use the K4A wrapper instead of the Orbbec SDK?
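One way to get a camera-space point cloud without involving the color camera is to undistort each depth pixel using the depth camera's own intrinsics and lens distortion, then scale the resulting ray by the measured depth. The Azure Kinect SDK's `fastpointcloud` example follows a similar idea: it precomputes a per-pixel xy table once and multiplies it by each frame's depth. The sketch below assumes the depth calibration follows the OpenCV-style rational Brown-Conrady model (k1 to k6, p1, p2) and that depth values are Z distances in millimeters. `DepthCalibration`, `distort`, `undistort`, `build_xy_table` and `depth_to_points` are hypothetical helpers, and how the Orbbec SDK's reported coefficients map onto these fields would need to be checked.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class DepthCalibration:
    """Hypothetical depth intrinsics, OpenCV rational Brown-Conrady convention (assumed)."""
    fx: float
    fy: float
    cx: float
    cy: float
    width: int
    height: int
    k1: float = 0.0
    k2: float = 0.0
    k3: float = 0.0
    k4: float = 0.0
    k5: float = 0.0
    k6: float = 0.0
    p1: float = 0.0
    p2: float = 0.0


def _radial(c: DepthCalibration, r2: float) -> float:
    # Rational radial factor: (1 + k1 r^2 + k2 r^4 + k3 r^6) / (1 + k4 r^2 + k5 r^4 + k6 r^6)
    return (1 + c.k1 * r2 + c.k2 * r2**2 + c.k3 * r2**3) / (
        1 + c.k4 * r2 + c.k5 * r2**2 + c.k6 * r2**3)


def distort(c: DepthCalibration, x: float, y: float) -> Tuple[float, float]:
    """Forward model: ideal normalized coordinates -> distorted normalized coordinates."""
    r2 = x * x + y * y
    k = _radial(c, r2)
    xd = x * k + 2 * c.p1 * x * y + c.p2 * (r2 + 2 * x * x)
    yd = y * k + c.p1 * (r2 + 2 * y * y) + 2 * c.p2 * x * y
    return xd, yd


def undistort(c: DepthCalibration, xd: float, yd: float, iterations: int = 20) -> Tuple[float, float]:
    """Invert distort() by fixed-point iteration (the scheme OpenCV's undistortPoints uses)."""
    x, y = xd, yd
    for _ in range(iterations):
        r2 = x * x + y * y
        dx = 2 * c.p1 * x * y + c.p2 * (r2 + 2 * x * x)
        dy = c.p1 * (r2 + 2 * y * y) + 2 * c.p2 * x * y
        k = _radial(c, r2)
        x = (xd - dx) / k
        y = (yd - dy) / k
    return x, y


def build_xy_table(c: DepthCalibration) -> List[Tuple[float, float]]:
    """Per-pixel undistorted ray (X/Z, Y/Z), row-major; depends only on calibration."""
    table = []
    for v in range(c.height):
        for u in range(c.width):
            table.append(undistort(c, (u - c.cx) / c.fx, (v - c.cy) / c.fy))
    return table


def depth_to_points(depth_mm: Sequence[float], table: List[Tuple[float, float]]) -> List[Tuple[float, float, float]]:
    """Scale each ray by its Z depth (mm); zero depth is treated as invalid and skipped."""
    points = []
    for z, (x, y) in zip(depth_mm, table):
        if z > 0:
            points.append((x * z, y * z, float(z)))
    return points
```

Because the table depends only on the calibration, it can be built once at startup, leaving one multiply per pixel per frame. For a strongly distorted wide-FoV lens the fixed-point iteration may converge more slowly near the image corners, so checking the round-trip error against `distort()` is a cheap sanity test.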

Thank you!