Not sure if this is still relevant, but I’ve been working with these cams lately as part of my job and found that, to match the RGB and depth streams of the device, you have to find 3 constants (which are different for each device):

- scaling factor

- x-axis offset

- y-axis offset

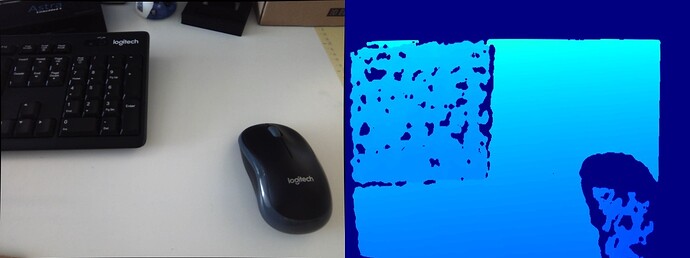

To find them using Python, place an object with clear edges in front of the device (you might need to adjust the distance to get better accuracy) and take a picture with both cameras.

After doing so, find the contours of your object in the RGB image using OpenCV.

The method that worked best for me is running Canny edge detection, converting the result to binary, and then using the method from the article "Filling holes in an image using OpenCV" to get the mask of your object.

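After getting the mask, use the following code to get the area and center of the shape:

```python
import cv2

# mat is the binary mask (uint8) of the object.
# OpenCV 4.x returns (contours, hierarchy); OpenCV 3.x returns three values.
contours, hierarchy = cv2.findContours(image=mat, mode=cv2.RETR_TREE, method=cv2.CHAIN_APPROX_SIMPLE)
# Take the largest contour; contours[0] can be a stray blob.
cont = max(contours, key=cv2.contourArea)
area = cv2.contourArea(cont)
M = cv2.moments(cont)
# Centroid from the image moments (m00 is the contour area).
cX = int(M["m10"] / M["m00"])
cY = int(M["m01"] / M["m00"])
```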

To extract your object from the depth image, I suggest either using the fact that the pixels around the object's edges have a value of 0 (you can dilate and erode to get better results), or filtering out everything that isn’t within a certain depth range. You can also combine the two.

After getting the mask of your object, use the same code from before to get the area and center of the shape from the depth image.

After calculating the area and center of your object in both images, use the following code to perform the transformation:

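```python
import math

import cv2
import numpy as np


def shift(mat, dx, dy):
    """Translate mat by (dx, dy) pixels, filling the exposed border with zeros."""
    new = cv2.copyMakeBorder(mat, 0 if dy < 0 else dy, 0 if dy > 0 else -dy,
                             0 if dx < 0 else dx, 0 if dx > 0 else -dx,
                             cv2.BORDER_CONSTANT, value=[0, 0, 0])
    # Crop back to the original size from the side opposite the padding.
    new = new[:, :-dx] if dx > 0 else new[:, -dx:]
    new = new[:-dy, :] if dy > 0 else new[-dy:, :]
    return new


def zoom_at(img, x, y, ratio):
    """Scale img by ratio about the point (x, y), keeping the original size."""
    # An affine warp also handles ratio > 1, where pasting the resized image
    # into a same-size canvas would overflow it.
    M = np.float32([[ratio, 0, x * (1 - ratio)],
                    [0, ratio, y * (1 - ratio)]])
    h, w = img.shape[:2]
    return cv2.warpAffine(img, M, (w, h))


# scale_rgb and scale_depth are the object areas measured in each image;
# area grows with the square of the linear scale, hence the square root.
scale_ratio = math.sqrt(scale_rgb / scale_depth)
x_diff, y_diff = center_x_rgb - center_x_depth, center_y_rgb - center_y_depth

matching_depth = shift(depth_image, x_diff, y_diff)
matching_depth = zoom_at(matching_depth, center_x_rgb, center_y_rgb, scale_ratio)
```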
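To make the three constants concrete, here is a tiny worked example with made-up numbers. The helper name and the measurements are hypothetical; it only repeats the arithmetic used in the transformation code:

```python
import math

def alignment_constants(area_rgb, center_rgb, area_depth, center_depth):
    """Return (scale, dx, dy) that map the depth image onto the RGB image.

    Hypothetical helper: areas are contour areas, centers are (x, y) centroids.
    """
    # Area grows with the square of linear size, hence the square root.
    scale = math.sqrt(area_rgb / area_depth)
    dx = center_rgb[0] - center_depth[0]
    dy = center_rgb[1] - center_depth[1]
    return scale, dx, dy

# Made-up measurements: the object covers 4x the area in the RGB image.
scale, dx, dy = alignment_constants(40000.0, (320, 240), 10000.0, (300, 250))
print(scale, dx, dy)  # 2.0 20 -10
```

Since the constants are different for each device, it makes sense to measure them once per device and store them instead of recomputing them for every frame.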